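The Faster RCNN implementation used in this experiment comes from the object_detection API in tensorflow/models, and it was trained and tested on the COCO2014 dataset. This tutorial was completed and tested on Windows 10 and Ubuntu 16.04 by me, together with Hu Baolin (胡保林) and Dr. Han.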

1. Environment and dependency setup

Download and install the TensorFlow Object Detection API by following the official installation instructions:

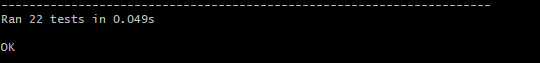

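Finally, run the test script: `python object_detection/builders/model_builder_test.py`

If it returns the result shown in the screenshot below, the API has been installed successfully.

If the installation fails, debug it using the error messages.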

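Note: object_detection and slim must be on your Python module path (the official instructions add the `research` and `research/slim` folders to the PYTHONPATH environment variable); otherwise you will get errors saying these two modules cannot be found.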

On Windows 10, you can run `python setup.py install` in each of the two folders above.
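If changing system environment variables is inconvenient, a script can also put the two folders on its own module search path. The sketch below is a hypothetical helper, not part of the API; it assumes `research_dir` is the `models/research` folder that contains both `object_detection` and `slim`, and it also builds the matching PYTHONPATH value using the platform's separator (`;` on Windows, `:` on Linux).

```python
import os
import sys


def add_research_paths(research_dir):
    """Hypothetical helper: prepend <research_dir> and <research_dir>/slim to sys.path.

    Only affects the current Python process. Returns the paths actually added.
    """
    research_dir = os.path.abspath(research_dir)
    wanted = [research_dir, os.path.join(research_dir, "slim")]
    added = [p for p in wanted if p not in sys.path]
    sys.path[:0] = added  # keeps research ahead of research/slim
    return added


def pythonpath_value(research_dir, existing=""):
    """Build a PYTHONPATH string with research and research/slim appended.

    os.pathsep is ";" on Windows and ":" on Linux, so this works on both.
    """
    research_dir = os.path.abspath(research_dir)
    parts = [p for p in existing.split(os.pathsep) if p]
    parts += [research_dir, os.path.join(research_dir, "slim")]
    return os.pathsep.join(parts)
```

Calling `add_research_paths("path/to/models/research")` at the top of a script, before importing `object_detection`, should have the same effect for that process as the PYTHONPATH setting.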

[Screenshot: test result after the full environment setup]

Download the COCO2014 dataset and store it in the following layout:

```
COCO/DIR/
  annotations/
    instances_train2014.json
    instances_val2014.json
  train2014/
    COCO_train2014_*.jpg
  val2014/
    COCO_val2014_*.jpg
```
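A quick check of this layout before converting the data can save a failed run. This is a minimal standard-library sketch; `check_coco_layout` is a hypothetical helper, and the expected names are copied from the directory tree above.

```python
from pathlib import Path

# Expected entries under the COCO root, taken from the layout above.
EXPECTED_FILES = [
    "annotations/instances_train2014.json",
    "annotations/instances_val2014.json",
]
EXPECTED_IMAGE_DIRS = {
    "train2014": "COCO_train2014_*.jpg",
    "val2014": "COCO_val2014_*.jpg",
}


def check_coco_layout(root):
    """Return a list of problems; an empty list means the layout looks right."""
    root = Path(root)
    problems = []
    for rel in EXPECTED_FILES:
        if not (root / rel).is_file():
            problems.append("missing file: " + rel)
    for dirname, pattern in EXPECTED_IMAGE_DIRS.items():
        image_dir = root / dirname
        if not image_dir.is_dir():
            problems.append("missing directory: " + dirname)
        elif next(image_dir.glob(pattern), None) is None:
            problems.append("no images matching %s in %s" % (pattern, dirname))
    return problems
```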

2. Using parts of the Object Detection API code

1. Creating TFRecord files in COCO or VOC format

object_detection/dataset_tools contains Python scripts for creating TFRecord files. Taking COCO2014 as an example:

```bash
python object_detection/dataset_tools/create_coco_tf_record.py --logtostderr \
```
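To sanity-check the generated files without TensorFlow, you can walk the TFRecord framing directly: each record is stored as an 8-byte little-endian length, a 4-byte masked CRC of that length, the payload, and a 4-byte masked CRC of the payload. The sketch below relies on that layout, skips CRC verification, and uses hypothetical function names; the file pattern you pass depends on how your version of `create_coco_tf_record.py` names (and possibly shards) its output.

```python
import glob
import struct


def count_tfrecord_records(path):
    """Count records in one TFRecord file by walking its framing.

    Per record: uint64 length (little-endian), uint32 masked CRC of the length,
    `length` payload bytes, uint32 masked CRC of the payload. CRCs are skipped.
    """
    count = 0
    with open(path, "rb") as f:
        while True:
            header = f.read(8)
            if not header:
                return count  # clean end of file
            if len(header) < 8:
                raise ValueError("truncated length field in %s" % path)
            (length,) = struct.unpack("<Q", header)
            rest = 4 + length + 4  # length CRC + payload + payload CRC
            if len(f.read(rest)) != rest:
                raise ValueError("truncated record in %s" % path)
            count += 1


def count_records(pattern):
    """Map every file matching a glob pattern to its record count."""
    return {p: count_tfrecord_records(p) for p in sorted(glob.glob(pattern))}
```

A count of zero, or a `ValueError`, usually means the conversion was interrupted or pointed at the wrong input.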

2. Training Faster RCNN Nasnet

- Download the pretrained model and modify the config file

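In the config, set the number of classes, the number of training iterations, the pretrained model path, and the TFRecord paths. (Note: if your GPU has only 11 GB of memory, reduce the image size in the config to 600×600 or smaller.)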
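The edits listed above can also be scripted. The sketch below is a hypothetical, regex-based helper that rewrites `field: value` pairs in a text-format pipeline config; the field names (`num_classes`, `num_steps`, `fine_tune_checkpoint`, `input_path`, and `height`/`width` under `fixed_shape_resizer`) are assumed from the sample configs, so check them against your copy of faster_rcnn_nas_coco.config. It does not understand nesting, which is why `occurrence` exists: `input_path` appears once for training and once for evaluation.

```python
import re


def _render(value):
    # Text-format protobuf: booleans are lowercase, strings double-quoted, numbers as-is.
    if isinstance(value, bool):
        return "true" if value else "false"
    return '"%s"' % value if isinstance(value, str) else str(value)


def set_field(config_text, field, value, occurrence=None):
    """Hypothetical helper: set `field: value` in a text-format pipeline config.

    occurrence=None rewrites every match; an int rewrites only that (0-based) match.
    """
    pattern = re.compile(r'(\b%s:\s*)("[^"]*"|[^\s}]+)' % re.escape(field))
    matches = list(pattern.finditer(config_text))
    if not matches:
        raise KeyError("field not found: %s" % field)
    targets = matches if occurrence is None else [matches[occurrence]]
    # Rewrite from the end so the offsets of earlier matches stay valid.
    for m in reversed(targets):
        config_text = config_text[:m.start(2)] + _render(value) + config_text[m.end(2):]
    return config_text
```

For the 11 GB case, `set_field(text, "height", 600)` followed by `set_field(text, "width", 600)` would shrink the fixed resizer. A more robust route is to parse the file with `google.protobuf.text_format` into `object_detection.protos.pipeline_pb2.TrainEvalPipelineConfig`; the regex version just avoids the TensorFlow dependency.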

Run the training command (from the research folder):

```bash
python object_detection/legacy/train.py --logtostderr \
    --train_dir=object_detection/data/coco/ \
    --pipeline_config_path=object_detection/samples/configs/faster_rcnn_nas_coco.config

# --train_dir: directory where checkpoints and training summaries are written
# --pipeline_config_path: path to the config file
```
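Checkpoints saved during training land in `--train_dir`. The sketch below picks the most recent one by file name, assuming the legacy trainer's `model.ckpt-<global_step>` naming; it is a hypothetical helper, and inside TensorFlow `tf.train.latest_checkpoint(train_dir)` does the same job by reading the `checkpoint` index file.

```python
import os
import re

# Assumed naming: <train_dir>/model.ckpt-<global_step>.index (plus .data-* and .meta).
_CKPT_INDEX_RE = re.compile(r"^model\.ckpt-(\d+)\.index$")


def latest_checkpoint_prefix(train_dir):
    """Hypothetical helper: prefix of the highest-step checkpoint, or None."""
    best_step, best_prefix = -1, None
    for name in os.listdir(train_dir):
        match = _CKPT_INDEX_RE.match(name)
        if match and int(match.group(1)) > best_step:
            best_step = int(match.group(1))
            best_prefix = os.path.join(train_dir, name[: -len(".index")])
    return best_prefix
```

Comparing the step as an integer matters: sorting names as strings would rank `model.ckpt-3000` above `model.ckpt-12000`.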

[Screenshot: training progress]

Model evaluation (from the same root directory as above)

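Note:

1. In legacy/evaluator.py, change line 58 to `EVAL_DEFAULT_METRIC = 'coco_detection_metrics'` so that COCO metrics are reported instead of the default PASCAL VOC metrics.

2. If you use Python 3, change `unicode` to `str` in utils/object_detection_evaluation.py.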
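The `unicode` change is needed because Python 3 removed that built-in: `str` is the text type, and bytes have to be decoded explicitly. The hypothetical helper below only illustrates the conversion such code needs on Python 3; it is not the code in object_detection_evaluation.py.

```python
def to_text(value, encoding="utf-8"):
    """Hypothetical helper: return `value` as a Python 3 text string (str).

    Bytes are decoded explicitly; anything else goes through str().
    """
    if isinstance(value, bytes):
        return value.decode(encoding)
    return str(value)
```

Decoding explicitly matters because a bare `str(b"person")` returns the string `"b'person'"` rather than `"person"`, which would silently corrupt category names.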

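```bash
python object_detection/legacy/eval.py \
    --logtostderr \
    --checkpoint_dir=object_detection/faster_rcnn_nas_coco_2018_01_28 \
    --eval_dir=object_detection/data/coco/ \
    --pipeline_config_path=object_detection/samples/configs/faster_rcnn_nas_coco.config \
    --run_once=True

# --checkpoint_dir: directory containing the checkpoint to evaluate
# --eval_dir: directory where evaluation summaries are written
```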

[Screenshot: test results (abbreviated)]

3. Fine-tuning on VOC

- Build the VOC data in TFRecord format

```bash
python object_detection/dataset_tools/create_pascal_tf_record.py \
```
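create_pascal_tf_record.py builds its records from the PASCAL VOC XML annotations. The standard-library sketch below shows roughly what is taken from one file: each object's class name and its bounding box divided by the image width and height, in the API's [ymin, xmin, ymax, xmax] order. It is an illustration under those assumptions; the function name is hypothetical, and the real script also stores other fields (such as `difficult`) and the encoded image.

```python
import xml.etree.ElementTree as ET


def parse_voc_annotation(xml_text):
    """Hypothetical helper: return (filename, objects) for one VOC annotation.

    Each object is (class_name, ymin, xmin, ymax, xmax), normalized to [0, 1].
    """
    root = ET.fromstring(xml_text)
    width = float(root.find("size/width").text)
    height = float(root.find("size/height").text)
    objects = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        objects.append((
            obj.find("name").text,
            float(box.find("ymin").text) / height,
            float(box.find("xmin").text) / width,
            float(box.find("ymax").text) / height,
            float(box.find("xmax").text) / width,
        ))
    return root.find("filename").text, objects
```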

Write a new config file, object_detection/samples/configs/faster_rcnn_nas_voc.config.

In it, set fine_tune_checkpoint to the path of the model weights trained on COCO.
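`fine_tune_checkpoint` takes a checkpoint prefix such as `.../model.ckpt` (or `.../model.ckpt-<step>` for weights you trained yourself), not the name of a single file on disk. The hypothetical helper below checks that a prefix looks complete, assuming the usual TF1 layout of one `.index` file plus one or more `.data-XXXXX-of-XXXXX` shards.

```python
import glob
import os


def check_checkpoint_prefix(prefix):
    """Hypothetical helper: list the files missing for a TF1 checkpoint prefix.

    A prefix that still ends in ".index" or ".meta" is rejected.
    """
    for suffix in (".index", ".meta"):
        if prefix.endswith(suffix):
            raise ValueError("use the prefix without %r: %s" % (suffix, prefix[: -len(suffix)]))
    missing = []
    if not os.path.isfile(prefix + ".index"):
        missing.append(prefix + ".index")
    # Variable values live in shards named <prefix>.data-XXXXX-of-XXXXX.
    if not glob.glob(glob.escape(prefix) + ".data-*-of-*"):
        missing.append(prefix + ".data-*-of-*")
    return missing
```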

Evaluation works the same way as above.

4. Faster RCNN Nasnet demo

See object_detection_tutorial.py. The file must be placed in the object_detection directory, or the paths inside it must be set correctly.
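The demo turns detected class ids into readable names through a category index built from a label map (via `label_map_util` in the API). The sketch below is a dependency-free, regex-based stand-in that reads simple `.pbtxt` label maps into the same `{id: {'id': id, 'name': name}}` shape; the function name is hypothetical, and it only handles flat `item { ... }` blocks with quoted strings and integer ids.

```python
import re

_ITEM_RE = re.compile(r"item\s*\{(.*?)\}", re.S)
_FIELD_RE = re.compile(r"""(\w+)\s*:\s*(?:"([^"]*)"|'([^']*)'|(-?\d+))""")


def load_category_index(pbtxt_text, use_display_name=True):
    """Hypothetical helper: parse a simple label map into {id: {'id', 'name'}}."""
    index = {}
    for body in _ITEM_RE.findall(pbtxt_text):
        fields = {}
        for m in _FIELD_RE.finditer(body):
            key, dq, sq, num = m.groups()
            if num is not None:
                fields[key] = int(num)
            else:
                fields[key] = dq if dq is not None else sq
        # Prefer display_name (e.g. "person") over name (e.g. "/m/01g317") when asked.
        name = fields.get("display_name") if use_display_name else None
        name = name or fields.get("name")
        index[fields["id"]] = {"id": fields["id"], "name": name}
    return index
```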
